
We Don’t Actually Know if AI Is Taking Over Everything

Since the launch of ChatGPT last year, I’ve heard some version of the same thing over and over again: What is going on? The rush of chatbots and endless “AI-powered” apps has made starkly clear that this technology is poised to upend everything, or at least something. Yet even the AI experts are struggling with a dizzying feeling that for all the talk of its transformative potential, so much about this technology is veiled in secrecy.

It isn’t just a feeling. More and more of this technology, once developed through open research, has become almost completely hidden within corporations that are opaque about what their AI models are capable of and how they are made. Transparency isn’t legally required, and the secrecy is causing problems: Earlier this year, The Atlantic revealed that Meta and others had used nearly 200,000 books to train their AI models without the compensation or consent of the authors.

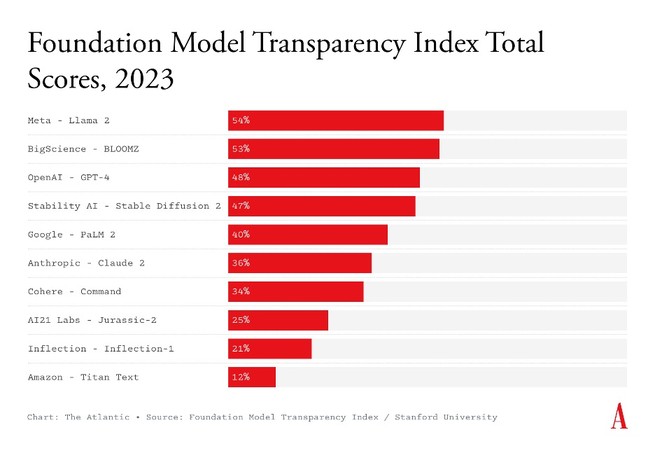

Now we have a way to measure just how bad AI’s secrecy problem actually is. Yesterday, Stanford University’s Center for Research on Foundation Models launched a new index that tracks the transparency of 10 major AI companies, including OpenAI, Google, and Anthropic. The researchers graded each company’s flagship model based on whether or not its developers publicly disclosed 100 different pieces of information, such as what data it was trained on, the wages paid to the data and content-moderation workers who were involved in its development, and when the model should not be used. One point was awarded for each disclosure. Among the 10 companies, the highest-scoring barely got more than 50 out of the 100 possible points; the average is 37. Every company, in other words, gets a resounding F.

Take OpenAI, which was named to indicate a commitment to transparency. Its flagship model, GPT-4, scored a 48, losing significant points for not revealing information such as the data that were fed into it, how it treated personally identifiable information that may have been captured in said scraped data, and how much energy was used to produce the model. Even Meta, which has prided itself on openness by allowing people to download and adapt its model, scored only 54 points. “A way to think about it is: You’re getting baked cake, and you can add decorations or layers to that cake,” says Deborah Raji, an AI accountability researcher at UC Berkeley who wasn’t involved in the research. “But you don’t get the recipe book for what’s actually in the cake.”

Read: These 183,000 Books Are Fueling the Biggest Fight in Publishing and Tech

Many companies, including OpenAI and Anthropic, have held that they keep such information secret for competitive reasons or to prevent risky proliferation of their technology, or both. I reached out to the 10 companies indexed by the Stanford researchers. An Amazon spokesperson said the company looks forward to carefully reviewing the index. Margaret Mitchell, a researcher and chief ethics scientist at Hugging Face, said the index misrepresented BLOOMZ as the firm’s model; it was in fact produced by an international research collaboration called the BigScience project that was co-organized by the company. (The Stanford researchers acknowledge this in the body of the report. For this reason, I marked BLOOMZ as a BigScience model, not a Hugging Face one, on the chart above.) OpenAI and Cohere declined a request for comment. None of the other companies responded.

The Stanford researchers selected the 100 criteria based on years of existing AI research and policy work, focusing on inputs into each model, facts about the model itself, and the final product’s downstream impacts. For example, the index references scholarly and journalistic investigations into the poor pay for data workers who help perfect AI models to explain its determination that the companies should specify whether they directly employ the workers and any labor protections they put in place. The lead creators of the index, Rishi Bommasani and Kevin Klyman, told me they tried to keep in mind the kinds of disclosures that would be most helpful to a range of different groups: scientists conducting independent research about these models, policy makers designing AI regulation, and consumers deciding whether to use a model in a particular situation.

In addition to insights about specific models, the index reveals industry-wide gaps of information. Not a single model the researchers assessed provides information about whether the data it was trained on had copyright protections or other rules restricting their use. Nor do any models disclose sufficient information about the authors, artists, and others whose works were scraped and used for training. Most companies are also tight-lipped about the shortcomings of their models, whether their embedded biases or how often they make things up.

That every company performs so poorly is an indictment of the industry as a whole. In fact, Amba Kak, the executive director of the AI Now Institute, told me that in her view, the index was not high enough of a standard. The opacity within the industry is so pervasive and ingrained, she told me, that even 100 criteria don’t fully reveal the problems. And transparency is not an esoteric concern: Without full disclosures from companies, Raji told me, “it’s a one-sided narrative. And it’s almost always the optimistic narrative.”

Read: AI’s Present Matters More Than Its Imagined Future

In 2019, Raji co-authored a paper showing that several facial-recognition products worked poorly on women and people of color, including ones being sold to the police. The research shed light on the risk of law enforcement using faulty technology. As of August, there have been six reported cases of police falsely accusing people of a crime in the U.S. based on flawed facial recognition; all of the accused are Black. These latest AI models pose similar risks, Raji said. Without giving policy makers or independent researchers the evidence they need to audit and back up corporate claims, AI companies can easily inflate their capabilities in ways that lead consumers or third-party app developers to use faulty or inadequate technology in critical contexts such as criminal justice and health care.

There have been rare exceptions to the industry-wide opacity. One model not included in the index is BLOOM, which was similarly produced by the BigScience project (but is different from BLOOMZ). The researchers for BLOOM conducted one of the few analyses available to date of the broader environmental impacts of large-scale AI models and also documented information about data creators, copyright, personally identifiable information, and source licenses for the training data. It shows that such transparency is possible. But changing industry norms will require regulatory mandates, Kak told me. “We cannot rely on researchers and the public to be piecing together this map” of information, she said.

Perhaps the biggest clincher is that across the board, the tracker finds that all of the companies have particularly abysmal disclosures in “impact” criteria, which include the number of users who use their product, the applications being built on top of the technology, and the geographic distribution of where these technologies are being deployed. This makes it far more difficult for regulators to track each firm’s sphere of control and influence, and to hold them accountable. It’s much harder for consumers as well: If OpenAI technology is helping your kid’s teacher, assisting your family doctor, and powering your office productivity tools, you may not even know. In other words, we know so little about these technologies we’re coming to rely on that we can’t even say how much we rely on them.

Secrecy, of course, is nothing new in Silicon Valley. Nearly a decade ago, the tech and legal scholar Frank Pasquale coined the phrase black-box society to refer to the way tech platforms were growing ever more opaque as they solidified their dominance in people’s lives. “Secrecy is approaching critical mass, and we’re in the dark about crucial decisions,” he wrote. And yet, despite the litany of cautionary tales from other AI technologies and social media, many people have grown comfortable with black boxes. Silicon Valley spent years establishing a new and opaque norm; now it’s just accepted as a part of life.
