The World Will Never Know the Truth About YouTube’s Rabbit Holes

Around the time of the 2016 election, YouTube became known as a home to the rising alt-right and to massively popular conspiracy theorists. The Google-owned site had more than 1 billion users and was playing host to charismatic personalities who had developed intimate relationships with their audiences, potentially making it a powerful vector for political influence. At the time, Alex Jones’s channel, Infowars, had more than 2 million subscribers. And YouTube’s recommendation algorithm, which accounted for the majority of what people watched on the platform, appeared to be pulling people deeper and deeper into dangerous delusions.

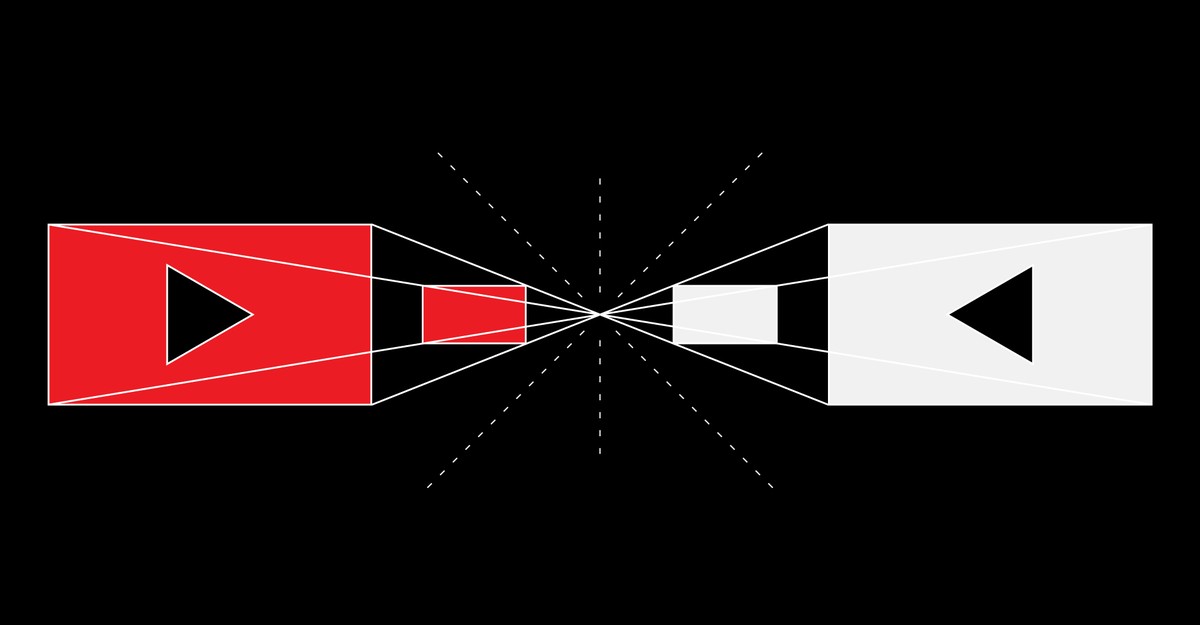

The process of “falling down the rabbit hole” was memorably illustrated by personal accounts of people who had ended up on strange paths into the dark heart of the platform, where they were intrigued and then convinced by extremist rhetoric: an interest in critiques of feminism could lead to men’s rights and then white supremacy and then calls for violence. Most troubling is that a person who was not necessarily looking for extreme content could end up watching it because the algorithm noticed a whisper of something in their previous choices. It could exacerbate a person’s worst impulses and take them to a place they wouldn’t have chosen, but would have trouble getting out of.

Just how big a rabbit-hole problem YouTube had wasn’t quite clear, and the company denied it had one at all even as it was making changes to address the criticisms. In early 2019, YouTube announced tweaks to its recommendation system with the goal of dramatically reducing the promotion of “harmful misinformation” and “borderline content” (the kinds of videos that were almost extreme enough to remove, but not quite). At the same time, it also went on a demonetizing spree, blocking shared-ad-revenue programs for YouTube creators who disobeyed its policies about hate speech. Whatever else YouTube continued to allow on its site, the idea was that the rabbit hole would be filled in.

A new peer-reviewed study, published today in Science Advances, suggests that YouTube’s 2019 update worked. The research team was led by Brendan Nyhan, a government professor at Dartmouth who studies polarization in the context of the internet. Nyhan and his co-authors surveyed 1,181 people about their existing political attitudes and then used a custom browser extension to monitor all of their YouTube activity and recommendations for a period of several months at the end of 2020. The study found that extremist videos were watched by only 6 percent of participants. Of those people, the majority had deliberately subscribed to at least one extremist channel, meaning that they hadn’t been pushed there by the algorithm. Further, these people were often coming to extremist videos from external links instead of from within YouTube.

These viewing patterns showed no evidence of a rabbit-hole process as it’s typically imagined: Rather than naive users suddenly and unwittingly finding themselves funneled toward hateful content, “we see people with very high levels of gender and racial resentment seeking this content out,” Nyhan told me. That people are primarily viewing extremist content through subscriptions and external links is something “only [this team has] been able to capture, because of the method,” says Manoel Horta Ribeiro, a researcher at the Swiss Federal Institute of Technology Lausanne who wasn’t involved in the study. Whereas many previous studies of the YouTube rabbit hole have had to use bots to simulate the experience of navigating YouTube’s recommendations (by clicking mindlessly on the next suggested video over and over and over), this is the first that obtained such granular data on real, human behavior.

The study does have an unavoidable flaw: It cannot account for anything that happened on YouTube before the data were collected, in 2020. “It may be the case that the susceptible population was already radicalized during YouTube’s pre-2019 era,” as Nyhan and his co-authors explain in the paper. Extremist content does still exist on YouTube, after all, and some people do still watch it. So there’s a chicken-and-egg dilemma: Which came first, the extremist who watches videos on YouTube, or the YouTuber who encounters extremist content there?

Examining today’s YouTube to try to understand the YouTube of several years ago is, to deploy another metaphor, “a little bit ‘apples and oranges,’” Jonas Kaiser, a researcher at Harvard’s Berkman Klein Center for Internet and Society who wasn’t involved in the study, told me. Though he considers it a solid study, he said he also recognizes the difficulty of learning much about a platform’s past by looking at one sample of users from its present. This was also a significant issue with a collection of new studies about Facebook’s role in political polarization, which were published last month (Nyhan worked on one of them). Those studies demonstrated that, although echo chambers on Facebook do exist, they don’t have major effects on people’s political attitudes today. But they couldn’t demonstrate whether the echo chambers had already had those effects long before the study.

The new research is still important, in part because it proposes a specific, technical definition of rabbit hole. The term has been used in different ways in common speech and even in academic research. Nyhan’s team defined a “rabbit hole event” as one in which a person follows a recommendation to get to a more extreme type of video than they were previously watching. They can’t have been subscribing to the channel they end up on, or to similarly extreme channels, before the recommendation pushed them. This mechanism wasn’t common in their findings at all. They saw it act on only 1 percent of participants, accounting for only 0.002 percent of all views of extremist-channel videos.

This is great to know. But, again, it doesn’t mean that rabbit holes, as the team defined them, weren’t at one point a bigger problem. It’s just a good indication that they seem to be rare right now. Why did it take so long to go looking for the rabbit holes? “It’s a shame we didn’t catch them on both sides of the change,” Nyhan acknowledged. “That would have been ideal.” But it took time to build the browser extension (which is now open source, so it can be used by other researchers), and it also took time to come up with a whole bunch of money. Nyhan estimated that the study received about $100,000 in funding, but an additional National Science Foundation grant that went to a separate team that built the browser extension was huge: almost $500,000.

Nyhan was careful not to say that this paper represents a total exoneration of YouTube. The platform hasn’t stopped letting its subscription feature drive traffic to extremists. It also continues to allow users to publish extremist videos. And learning that only a tiny percentage of users stumble across extremist content isn’t the same as learning that no one does; a tiny percentage of a gargantuan user base still represents a large number of people.

This speaks to the broader problem with last month’s new Facebook research as well: People want to understand why the country is so dramatically polarized, and they have seen the huge changes in our technology use and information consumption in the years when that polarization became most obvious. But the web changes every day. Things that YouTube no longer wants to host could still find huge audiences, instead, on platforms such as Rumble; most young people now use TikTok, a platform that barely existed when we started talking about the effects of social media. As soon as we start to unravel one mystery about how the internet affects us, another one takes its place.
