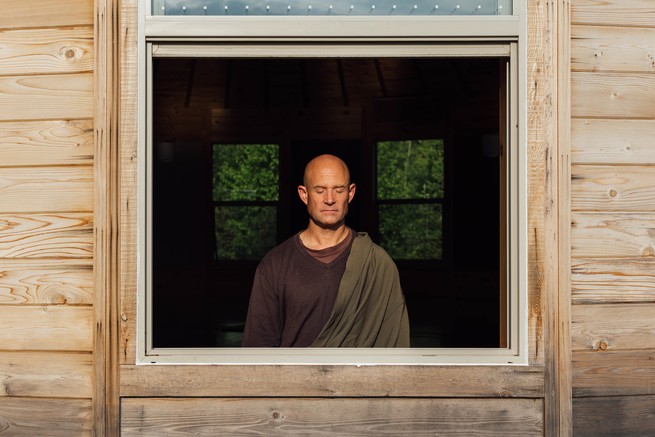

The Monk Who Thinks the World Is Ending

The monk paces the Zendo, forecasting the end of the world.

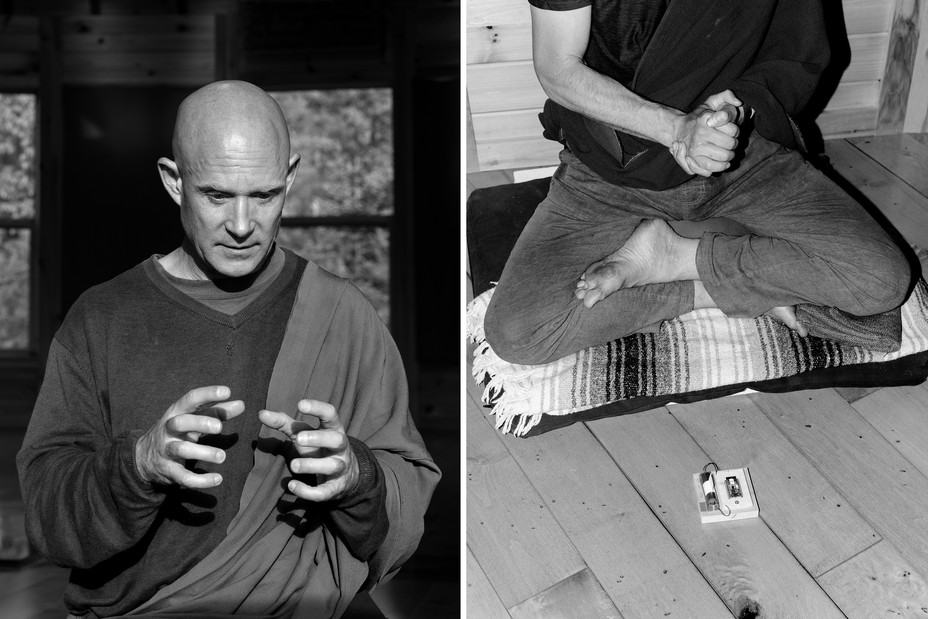

Soryu Forall, ordained in the Zen Buddhist tradition, is speaking to the two dozen residents of the monastery he founded a decade ago in Vermont’s far north. Bald, slight, and incandescent with intensity, he offers a sweep of human history. Seventy thousand years ago, a cognitive revolution allowed Homo sapiens to communicate in story: to construct narratives, to make art, to conceive of god. Twenty-five hundred years ago, the Buddha lived, and some humans began to touch enlightenment, he says, moving beyond narrative and breaking free from ignorance. Three hundred years ago, the scientific and industrial revolutions ushered in the beginning of the “utter decimation of life on this planet.”

Humanity has “exponentially destroyed life on the same curve as we have exponentially increased intelligence,” he tells his congregants. Now the “crazy suicide wizards” of Silicon Valley have ushered in another revolution. They have created artificial intelligence.

Human intelligence is sliding toward obsolescence. Artificial superintelligence is growing dominant, eating numbers and data, processing the world with algorithms. There is “no reason” to think AI will preserve humanity, “as if we’re really special,” Forall tells the residents, clad in dark, loose clothing, seated on zafu cushions on the wood floor. “There’s no reason to think we wouldn’t be treated like cattle in factory farms.” Humans are already destroying life on this planet. AI might soon destroy us.

For a monk seeking to move us beyond narrative, Forall tells a terrifying story. His monastery is called MAPLE, which stands for the “Monastic Academy for the Preservation of Life on Earth.” The residents there meditate on their breath and on metta, or loving-kindness, an emanation of joy to all creatures. They meditate in order to achieve inner clarity. And they meditate on AI and existential risk in general: life’s violent, early, and unnecessary end.

Does it matter what a monk in a remote Vermont monastery thinks about AI? A number of important researchers think it does. Forall provides spiritual advice to AI thinkers, and hosts talks and “awakening” retreats for researchers and developers, including employees of OpenAI, Google DeepMind, and Apple. Roughly 50 tech types have done retreats at MAPLE in the past few years. Forall recently visited Tom Gruber, one of the inventors of Siri, at his home in Maui for a week of dharma dinners and snorkeling among the octopuses and neon fish.

Forall’s first goal is to expand the pool of humans following what Buddhists call the Noble Eightfold Path. His second is to influence technology by influencing technologists. His third is to change AI itself, seeing whether he and his fellow monks might be able to embed the enlightenment of the Buddha into the code.

Forall knows this sounds ridiculous. Some people have laughed in his face when they heard about it, he says. But others are listening closely. “His training is different from mine,” Gruber told me. “But we have that intellectual connection, where we see the same deep system problems.”

Forall describes the project of creating an enlightened AI as perhaps “the most important act of all time.” Humans need to “build an AI that walks a spiritual path,” one that will persuade the other AI systems not to harm us. Life on Earth “depends on that,” he told me, arguing that we should devote half of global economic output ($50 trillion, give or take) to “that one thing.” We need to build an “AI guru,” he said. An “AI god.”

His vision is dire and grand, but perhaps that is why it has found such a receptive audience among the folks building AI, many of whom conceive of their work in similarly epochal terms. No one can know for sure what this technology will become; when we imagine the future, we have no choice but to rely on myths and forecasts and science fiction: on stories. Does Forall’s story have the weight of prophecy, or is it just one that AI alarmists are telling themselves?

In the Zendo, Forall finishes his talk and answers a few questions. Then it’s time for “the most fun thing in the world,” he says, his self-seriousness evaporating for a moment. “It’s pretty close to the maximum amount of fun.” The monks stand tall before a statue of the Buddha. They bow. They straighten up again. They get down on their hands and knees and kiss their foreheads to the earth. They prostrate themselves in unison 108 times, as Forall keeps count on a string of mala beads and darkness begins to fall over the Zendo.

The world is witnessing the emergence of an eldritch new force, some say, one humans created and are struggling to understand.

AI systems simulate human intelligence.

AI systems take an input and spit out an output.

AI systems generate those outputs through an algorithm, one trained on troves of data scraped from the web.

AI systems create videos, poems, songs, pictures, lists, scripts, stories, essays. They play games and pass tests. They translate text. They solve impossible problems. They do math. They drive. They chat. They act as search engines. They are self-improving.

AI systems are causing concrete problems. They are providing inaccurate information to consumers and are generating political disinformation. They are being used to gin up spam and trick people into revealing sensitive personal data. They are already beginning to take people’s jobs.

Beyond that (what they can and cannot do, what they are and are not, the threat they do or do not pose), it gets hard to say. AI is revolutionary, dangerous, sentient, capable of reasoning, janky, likely to kill millions of humans, likely to enslave millions of humans, not a threat in and of itself. It is a person, a “digital mind,” nothing more than a fancy spreadsheet, a new god, not a thing at all. It is intelligent or not, or maybe just designed to seem intelligent. It is us. It is something else. The people making it are stoked. The people making it are terrified and suffused with regret. (The people making it are getting rich, that’s for sure.)

In this roiling debate, Forall and many MAPLE residents are what are often called, derisively if not inaccurately, “doomers.” The seminal text in this ideological lineage is Nick Bostrom’s Superintelligence, which posits that AI could turn humans into gorillas, in a way. Our existence could depend not on our own choices but on the choices of a more intelligent other.

Amba Kak, the executive director of the AI Now Institute, summarized this view: “ChatGPT is the beginning. The end is, we’re all going to die,” she told me earlier this year, while rolling her eyes so hard I swear I could hear it through the phone. She described the narrative as both self-flattering and cynical. Tech companies have an incentive to make such systems seem otherworldly and impossible to regulate, when they are really “banal.”

Forall is not, by any means, a coder who understands AI at the zeros-and-ones level; he does not have a detailed familiarity with large language models or algorithmic design. I asked him whether he had used some of the popular new AI gadgets, such as ChatGPT and Midjourney. He had tried one chatbot. “I just asked it one question: Why practice?” (He meant “Why should a person practice meditation?”)

Did he find the answer satisfactory?

“Oh, not really. I don’t know. I haven’t found it impressive.”

His lack of detailed familiarity with AI hasn’t changed his conclusions about the technology. When I asked whom he looks to or reads in order to understand AI, he at first, deadpan, answered, “the Buddha.” He then clarified that he also likes the work of the best-selling historian Yuval Noah Harari and a number of prominent ethical-tech folks, among them Zak Stein and Tristan Harris. And he is spending his life ruminating on AI’s risks, which he sees as far from banal. “We are watching humanist values, and therefore the political systems based on them, such as democracy, as well as the economic systems: they’re just falling apart,” he said. “The ultimate authority is moving from the human to the algorithm.”

Forall has been worried about the apocalypse since he was 4. In one of his first memories, he is standing in the kitchen with his mother, just a little shorter than the trash can, panicking over people killing one another. “I remember telling her with the expectation that somehow it would make a difference: ‘We have to stop them. Just stop the people from killing everybody,’” he told me. “She said ‘Yes’ and then went back to chopping the vegetables.” (Forall’s mother worked for humanitarian nonprofits and his father for conservation nonprofits; the household, which attended Quaker meetings, listened to a lot of NPR.)

He was a weird, intense kid. He experienced something like ego death while snow-angeling in fresh Vermont powder when he was 12: “direct knowledge that I, that I, is all living things. That I am this whole planet of living things.” He recalled pestering his mother’s friends “about how we’re going to save the world and you’re not doing it” when they came over. He never recovered from seeing Terminator 2: Judgment Day as a youngster.

I asked him whether some personal experience of trauma or hardship had made him so aware of the horrors of the world. Nope.

Forall attended Williams College for a year, studying economics. But, he told me, he was racked with questions no professor or textbook could answer. Is it true that we are just matter, just chemicals? Why is there so much suffering? To find the answer, at 18, he dropped out and moved to a 300-year-old Zen monastery in Japan.

People unfamiliar with different types of Buddhism might imagine Zen to be, well, zen. This would be a misapprehension. Zen practitioners are not unlike the Trappists: ascetic, intense, renunciatory. Forall spent years begging, self-purifying, and sitting in silence for months at a time. (One of the happiest moments of his life, he told me, was toward the end of a 100-day sit.) He studied other Buddhist traditions and eventually, he added, did go back and finish his economics degree at Williams, to the relief of his parents.

He got his answer: Craving is the root of all suffering. And he became ordained, giving up the name Teal Scott and becoming Soryu Forall: “Soryu” meaning something like “a rising spiritual practice” and “Forall” meaning, of course, “for all.”

Back in Vermont, Forall taught at monasteries and retreat centers, got kids to learn mindfulness through music and tennis, and co-founded a nonprofit that set up meditation programs in schools. In 2013, he opened MAPLE, a “modern” monastery addressing the plagues of environmental destruction, lethal weapons systems, and AI, offering co-working and online courses as well as traditional monastic training.

In the past few years, MAPLE has become something of the house monastery for people worried about AI and existential risk. This growing influence is manifest on its books. The nonprofit’s revenues have quadrupled, thanks in part to contributions from tech executives as well as organizations such as the Future of Life Institute, co-founded by Jaan Tallinn, a co-creator of Skype. The donations have helped MAPLE open offshoots (Oak in the Bay Area, Willow in Canada) and plan more. (The highest-paid person at MAPLE is the property manager, who earns roughly $40,000 a year.)

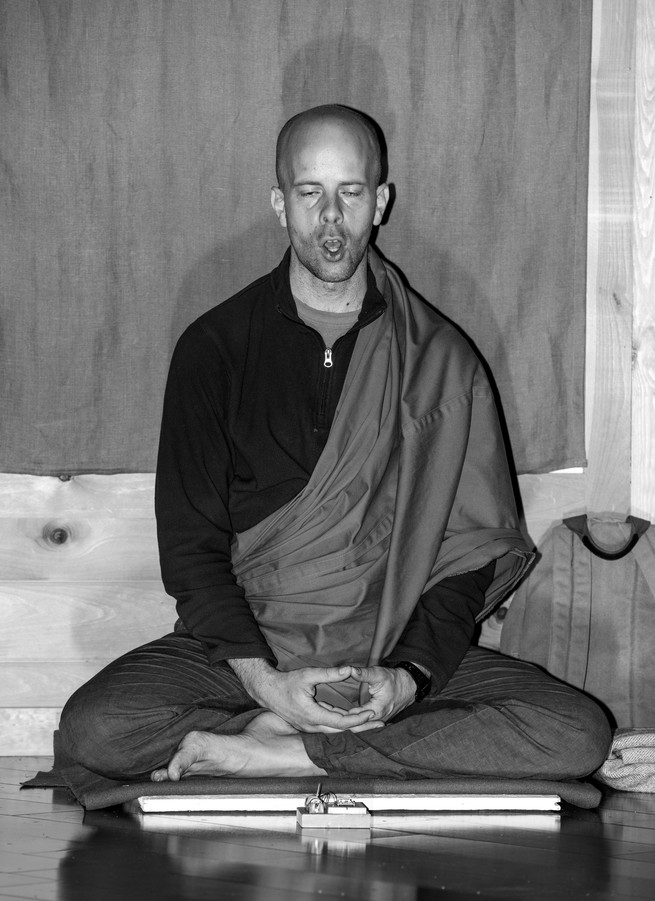

MAPLE is not technically a monastery, as it is not part of a specific Buddhist lineage. Still, it is a monastery. At 4:40 a.m., the Zendo is full. The monks and novices sit in silence below signs that read, among other things, “Abandon all hope,” “This place will not support you,” and “Nothing you can think of will help you as you die.” They sing in Pali, a liturgical language, extolling the freedom of enlightenment. They drone in English, talking of the Buddha. Then they chant part of the Heart Sutra to the beat of a drum, becoming ever louder and more ecstatic over the course of 30 minutes: “Gyate, gyate, hara-gyate, hara-sogyate, boji sowaka!” “Gone, gone, gone all the way over, everyone gone to the other shore. Enlightenment!”

The residents keep a strict schedule, much of it in silence. They chant, meditate, exercise, eat, work, eat, work, study, meditate, and chant. During my visit, the head monk asked someone to breathe more quietly during meditation. Over lunch, the congregants discussed how to remove ticks from your body without killing them (I don’t think this is possible). Forall put in a request for everyone to “chant more beautifully.” I saw several monks pouring water into their bowls to drink up every last bit of food.

The strictness of the place helps them let go of ego and see the world more clearly, residents told me. “To preserve all life: You can’t do that until you come to love all life, and that has to be trained,” a 20-something named Bodhi Joe Pucci told me.

Many people find their time at MAPLE transformative. Others find it traumatic. I spoke with one woman who said she had experienced a sexual assault during her time at Oak in California. That was hard enough, she told me. But she felt more hurt by the way the institution responded after she reported it to Forall and later to the nonprofit’s board: with an odd, stony silence. (Forall told me that he cared for this person, and that MAPLE had investigated the claims and did not find “evidence to support further action at this time.”) The message that MAPLE’s culture sends, the woman told me, is: “You should give everything, your whole being, everything you have, in service to this community, because it’s the most important thing you could ever do.” That culture, she added, “disconnected people from reality.”

While the residents are chanting in the Zendo, I notice that two are seated in front of an electrical machine, its tiny green and red lights flickering as they drone away. A few weeks earlier, several residents had built place-mat-size wooden boards with accelerometers in them. The monks would sit on them while the machine measured how on the beat their chanting was: green light, good; red light, bad.

Chanting on the beat, Forall acknowledged, is not the same thing as cultivating universal empathy; it is not going to save the world. But, he told me, he wanted to use technology to improve the conscientiousness and clarity of MAPLE residents, and to use the conscientiousness and clarity of MAPLE residents to improve the technology all around us. He imagined changes to human “hardware” down the road (genetic engineering, brain-computer interfaces) and to AI systems. AI is “already both machine and living thing,” he told me, made from us, with our data and our labor, inhabiting the same world we do.

Does any of this make sense? I posed that question to an AI researcher named Sahil, who attended one of MAPLE’s retreats earlier this year. (He asked me to withhold his last name because he has close to zero public online presence, something I confirmed with a shocked, admiring Google search.)

He had gone into the retreat with a lot of skepticism, he told me: “It sounds ridiculous. It sounds wacky. Like, what is this ‘woo’ shit? What does it have to do with engineering?” But while there, he said, he experienced something remarkable. He was suffering from “debilitating” back pain. While meditating, he concentrated on emptying his mind and found his back pain becoming illusory, falling away. He felt “ecstasy.” He felt like an “ice-cream sandwich.” The retreat had helped him understand more clearly the nature of his own mind, and the need for better AI systems, he told me.

That said, he and some other technologists had reviewed one of Forall’s ideas for AI technology and “completely tore it apart.”

Does it make any sense for us to be worried about this at all? I asked myself that question as Forall and I sat on a covered porch, drinking tea and eating dates stuffed with almond butter that a resident of the monastery wordlessly dropped off for us. We were listening to birdsong, looking out on the Green Mountains rolling into Canada. Was the world really ending?

Forall was adamant: Nine countries are armed with nuclear weapons. Even if we stop the catastrophe of climate change, we will have done so too late for thousands of species and billions of beings. Our democracy is fraying. Our trust in one another is fraying. Many of the very people creating AI believe it could be an existential threat: One 2022 survey asked AI researchers to estimate the probability that AI would cause “severe disempowerment” or human extinction; the median response was 10 percent. The destruction, Forall said, is already here.

But other experts see a different narrative. Jaron Lanier, one of the inventors of virtual reality, told me that “giving AI any kind of a status as a proper noun is not, strictly speaking, in some absolute sense, provably incorrect, but is pragmatically incorrect.” He continued: “If you think of it as a non-thing, just a collaboration of people, you gain a lot in terms of thoughts about how to make it better, or how to manage it, or how to deal with it. And I say that as somebody who’s very much in the center of the current activity.”

I asked Forall whether he felt there was a risk that he was too attached to his own story about AI. “It’s important to know that we don’t know what’s going to happen,” he told me. “It’s also important to look at the evidence.” He said it was clear we were on an “accelerating curve,” in terms of an explosion of intelligence and a cataclysm of death. “I don’t think that these systems will care too much about benefiting people. I just can’t see why they would, in the same way that we don’t care about benefiting most animals. While it is a story in the future, I feel like the burden of proof isn’t on me.”

That evening, I sat in the Zendo for an hour of silent meditation with the monks. Several times during my visit to MAPLE, a resident had told me that the greatest insight they achieved was during an “interview” with Forall: a private one-on-one instructional session, held during zazen. “You don’t experience it elsewhere in life,” one student of Forall’s told me. “For those seconds, those minutes that I’m in there, it’s the only thing in the world.”

Toward the very end of the hour, the head monk called out my name, and I rushed up a rocky path to a smaller, softly lit Zendo, where Forall sat on a cushion. For 15 minutes, I asked questions and got answers from this unknowable, unusual mind, not about AI but about life.

When I returned to the big Zendo, I was surprised to find all of the other monks still sitting there, waiting for me, meditating in the dark.